Case Study Q&A Tool

Building an AI-powered tool to convey the deeper value of design work: systems thinking, tradeoffs, and strategic intent

Overview

A custom AI assistant for deeper dives into design work

I built an embedded AI assistant that allows users to ask questions about projects in a conversational, on-demand way. It's currently only available for the Pulse Energy Engagement Dashboard case study, but will be expanded to other case studies soon.

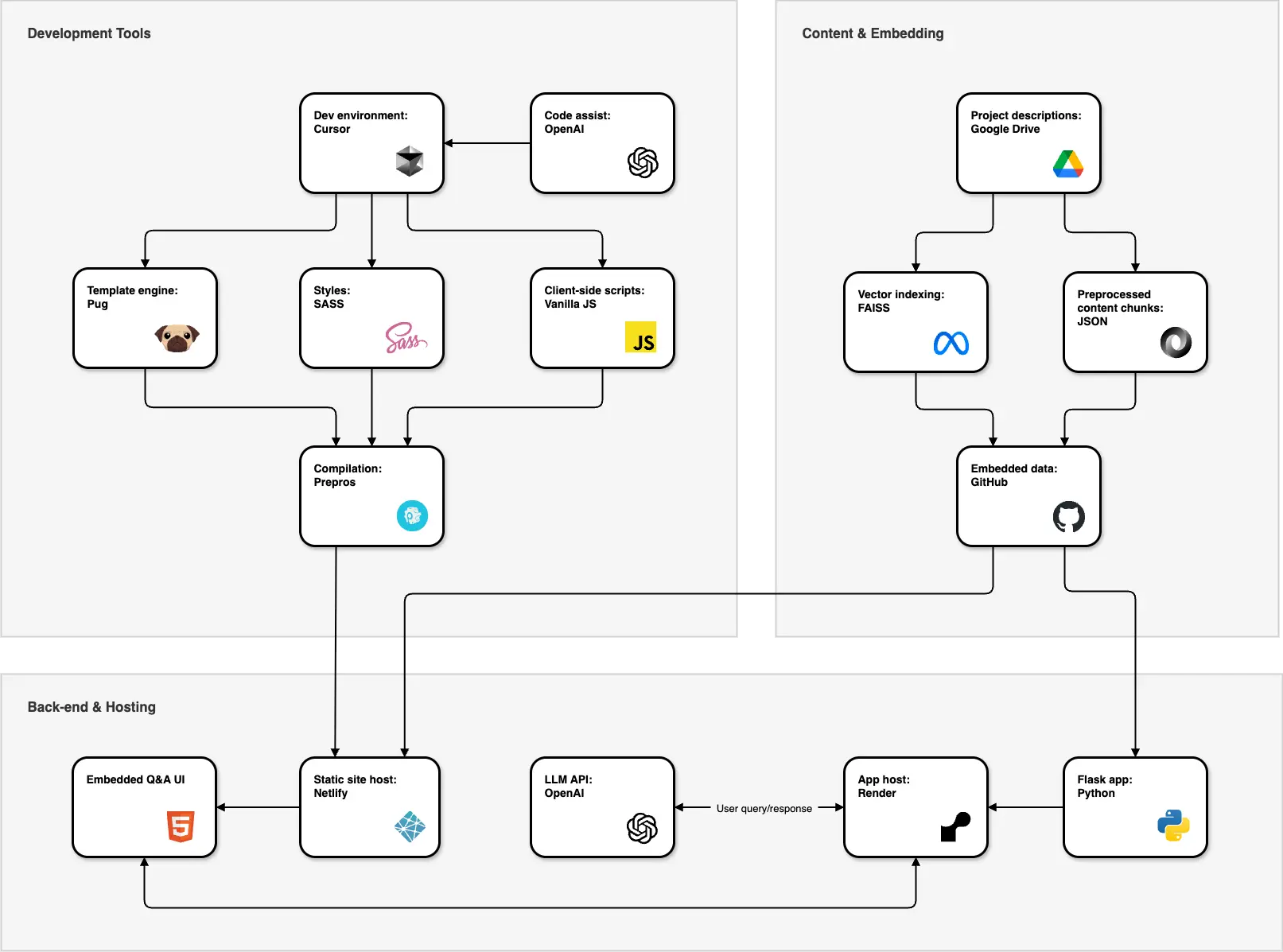

System architecture

Snapshot of how it's all stitched together

All product names, logos, and brands are property of their respective owners. All company, product, and service names used in this diagram are for identification purposes only. Use of these names, logos, and brands does not imply endorsement.

The system handles everything, from chunking and embedding with OpenAI to a Flask backend and static-site integration, giving users a way to ask about design decisions that screenshots can't convey.

How it works

How information is processed and presented

- Rough project notes and reflections are captured in freeform documents in ChatGPT and Google Drive.

- Notes are converted to .txt files, one per case study, which serve as the input for downstream processing.

- A custom Python script breaks the content into structured JSON chunks, generates vector embeddings for them with OpenAI, and stores the vectors in a FAISS index.

- The .json chunk file and FAISS index are committed to GitHub, keeping the embedded data version-controlled and colocated with the frontend.

- The static site is built using Cursor as the development environment and OpenAI for inline code assistance.

- All front-end assets are compiled and bundled via Prepros, producing clean, deployable static files.

- The site is hosted on Netlify, which serves both the case studies and the embedded assistant UI.

- The backend logic runs in a Flask app written in Python and hosted on Render, which exposes an API for handling user queries.

- The assistant backend references the JSON and FAISS files directly from the GitHub repo, keeping content and logic decoupled but tightly aligned.

- Queries are routed from the static site to the Flask API, which fetches relevant chunks and sends them—along with the user's question—to OpenAI's LLM API.

- The LLM's response is returned to the static UI, completing the query cycle and surfacing context-aware answers drawn from my original project thinking.

- Submitted queries are logged to Airtable, allowing me to track usage and gather feedback. The logged queries and feedback then inform refinements to the assistant, allowing it to improve over time.
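The chunking step can be sketched in a few lines of Python. This is a minimal, hypothetical version rather than the actual script: it assumes fixed-size, overlapping word windows and a made-up record shape (`id`, `source`, `text`), and it leaves out the OpenAI embedding and FAISS indexing calls so it runs with the standard library alone.

```python
import json
from pathlib import Path


def chunk_text(text: str, source: str, max_words: int = 120, overlap: int = 20) -> list[dict]:
    """Split text into overlapping word windows and return JSON-ready records.

    max_words and overlap are illustrative defaults, not tuned values.
    """
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        window = words[start:start + max_words]
        chunks.append({
            "id": f"{source}-{len(chunks)}",  # hypothetical ID scheme
            "source": source,
            "text": " ".join(window),
        })
        # Stop once this window reaches the end, so the tail isn't emitted twice.
        if start + max_words >= len(words):
            break
    return chunks


def write_chunks(txt_path: Path, out_path: Path) -> int:
    """Read one case study's .txt file and write its chunks to a .json file."""
    source = txt_path.stem
    chunks = chunk_text(txt_path.read_text(encoding="utf-8"), source)
    out_path.write_text(json.dumps(chunks, indent=2), encoding="utf-8")
    return len(chunks)
```

Overlap keeps a sentence that straddles a boundary available in both neighboring chunks; the window and overlap sizes here are placeholders, not tuned values.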
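Since the JSON and FAISS files live in the GitHub repo, the backend can pull them over HTTPS from GitHub's raw-content host. A minimal sketch, assuming a public repo; the `owner`, `repo`, `ref`, and path values are hypothetical placeholders, and the helper names are illustrative.

```python
import json
import urllib.request


def raw_github_url(owner: str, repo: str, ref: str, path: str) -> str:
    """Build the raw.githubusercontent.com URL for a file at a branch or commit."""
    return f"https://raw.githubusercontent.com/{owner}/{repo}/{ref}/{path.lstrip('/')}"


def fetch_bytes(url: str, timeout: float = 10.0) -> bytes:
    """Download a file as raw bytes (the FAISS index is binary, not text)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()


def load_chunks(owner: str, repo: str, ref: str, path: str) -> list[dict]:
    """Fetch and parse the JSON chunk file from the repo."""
    return json.loads(fetch_bytes(raw_github_url(owner, repo, ref, path)))
```

The FAISS index bytes would then be written to a file and loaded with FAISS's own `faiss.read_index`. Pinning `ref` to a commit SHA rather than a branch name keeps the backend reading a known version of the data.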
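When a query comes in, the backend embeds the question and looks up the nearest chunks. FAISS handles that search efficiently; the brute-force cosine-similarity version below is only a dependency-free stand-in that shows what the lookup computes. It assumes embeddings are already available as plain lists of floats, and the `min_score` cutoff is a hypothetical filtering step, not a documented part of the real backend.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(
    query_vec: list[float],
    chunk_vecs: list[list[float]],
    chunks: list[dict],
    k: int = 3,
    min_score: float = 0.0,
) -> list[tuple[float, dict]]:
    """Return up to k (score, chunk) pairs, best first, dropping weak matches."""
    scored = [(cosine(query_vec, vec), chunk) for vec, chunk in zip(chunk_vecs, chunks)]
    scored = [pair for pair in scored if pair[0] >= min_score]
    # Sort on the score only, so ties never try to compare the chunk dicts.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]
```

In FAISS terms, L2-normalizing the vectors and searching a flat inner-product index (`faiss.IndexFlatIP`) produces the same ranking; which index type the assistant actually uses isn't specified here.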
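Logging a query to Airtable comes down to one authenticated POST against Airtable's REST API (`https://api.airtable.com/v0/{baseId}/{tableName}`), with the record's values under `fields`. The sketch below only builds the request so it can be inspected without a network call; the base ID, table name, token, and column names are hypothetical and would need to match the real table.

```python
import json
import urllib.parse
import urllib.request
from datetime import datetime, timezone

AIRTABLE_API = "https://api.airtable.com/v0"


def build_log_request(
    base_id: str, table: str, token: str, question: str, answer: str, case_study: str
) -> urllib.request.Request:
    """Build (but don't send) a POST that creates one Airtable record for a query."""
    payload = {
        "fields": {
            # Hypothetical column names; they must match the table's actual fields.
            "Question": question,
            "Answer": answer,
            "Case Study": case_study,
            "Timestamp": datetime.now(timezone.utc).isoformat(),
        }
    }
    return urllib.request.Request(
        f"{AIRTABLE_API}/{base_id}/{urllib.parse.quote(table)}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
```

Sending it is then `urllib.request.urlopen(req, timeout=10)`. Wrapping that call in a `try`/`except` keeps a logging failure from blocking the user's answer.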

Why bother?

Because screenshots don't capture the full story

Much of the real value of good product design isn't easily captured in visuals—it's in the judgment, tradeoffs, and micro-decisions that shape the product and process. These are often difficult to convey in shorter, static case studies.

- →Traditional, design-focused case studies don't always show a designer's true impact—especially when it's rooted in systems thinking and cross-functional alignment

- →Design is shaped by the small-scale decisions that don't make headlines, but significantly impact product quality

- →I wanted to build a tool from scratch that met a real need and gave me full control over its design and architecture

- →It was a chance to work hands-on with LLMs, stretch my technical skills, and treat the assistant as a product in itself

The future is not about your ability to use Figma; it is about your judgment and your ability to make things happen.

What I wanted to achieve

Conveying more detailed insights

- →Let visitors explore case studies in greater depth than static content allows

- →Reveal the thinking, decisions, and priorities behind the work

- →Provide nuanced context without overwhelming the page or casual readers

- →Highlight the value of experience-based design judgment

- →Build a scalable, reusable layer of interactivity for long-form case studies

What I'm learning

This is a living project, still evolving as I experiment, refactor, and uncover new ways to improve its usefulness.

- → AI-powered tools have incredible potential to transform design work, but they're like any other design tool—they're only as good as the designer using them

- →Designing AI interfaces requires different guardrails than traditional UI

- →Prompt engineering IS product design—how you frame the assistant's role directly impacts user trust

- →Even small, self-directed tools benefit from a clear system architecture and modular design choices

- →Embedding != training—LLMs don't “know” your project; their usefulness depends on the quality of chunking, filtering, and framing

- →Building for yourself is a fast track to clarity—you can skip discovery, but not design thinking
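Framing the assistant's role happens in the prompt the backend sends to the LLM alongside the retrieved chunks. A minimal sketch of that assembly, assuming the chat-style message list (`role`/`content` dicts) that OpenAI's chat APIs accept; the system-prompt wording, the `build_messages` name, and the character budget are illustrative rather than the assistant's actual prompt.

```python
# Illustrative system prompt; the real assistant's wording isn't published here.
SYSTEM_PROMPT = (
    "You answer questions about a designer's case study. "
    "Use only the provided context. If the context doesn't cover the question, say so."
)


def build_messages(question: str, chunks: list[dict], max_context_chars: int = 6000) -> list[dict]:
    """Combine retrieved chunks and the user's question into chat messages."""
    context_parts = []
    used = 0
    for chunk in chunks:
        text = chunk["text"]
        # Stop adding context once the (illustrative) character budget would be exceeded.
        if used + len(text) > max_context_chars:
            break
        context_parts.append(f"[{chunk['id']}] {text}")
        used += len(text)
    context = "\n\n".join(context_parts) or "(no relevant context found)"
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
    ]
```

With the official Python SDK, the result would be passed as `messages=` to `client.chat.completions.create(...)`. Telling the model to admit when context is missing is one way to apply the kind of guardrail that protects user trust.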

Future improvements

Just a few of the many things I'd like to add/improve

- → Cleaner UI and interaction flow: Make the usage disclaimer dismissible and improve how example questions are surfaced.

- →Spam prevention and reliability: Add basic abuse protection, improved error handling, and fallback states for failed queries.

- →Richer user experience: Support recent question history, user-submitted feedback, and context-specific assistant views based on the case study.

- →Expanded functionality: Introduce a general Q&A mode for broader portfolio queries and enable context switching across multiple case studies.

- ✓Feedback and improvement loop: Log all user interactions to Airtable, generate periodic digests, and use OpenAI to analyze feedback and suggest refinements to the assistant. [Added Jul 31, 2025]

- ✓Loading state messages: Display a series of status messages during answer generation to keep users informed, especially when wait times run longer than expected. [Added Aug 3, 2025]
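For the basic abuse protection listed above, a per-client rate limit is a common first step. A minimal sketch of a sliding-window limiter, assuming requests are keyed by something like the client IP; the limits are placeholder values, and the injectable `clock` exists only to make the logic testable.

```python
import time
from collections import defaultdict, deque
from typing import Callable


class RateLimiter:
    """Sliding-window limiter: at most `limit` requests per `window` seconds per key."""

    def __init__(self, limit: int = 10, window: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock
        self.hits: dict[str, deque] = defaultdict(deque)

    def allow(self, key: str) -> bool:
        """Record and permit a request for `key`, or reject it if the window is full."""
        now = self.clock()
        q = self.hits[key]
        # Drop timestamps that have slid out of the window.
        while q and now - q[0] >= self.window:
            q.popleft()
        if len(q) >= self.limit:
            return False
        q.append(now)
        return True
```

The state lives in process memory, so if the Flask app ran several worker processes, each would keep its own counts; a shared store would be needed to enforce one global limit.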
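The periodic digests in the feedback loop can start as a simple aggregation over logged records before any LLM analysis. A minimal sketch, assuming each record is a dict with hypothetical `Timestamp` (ISO 8601 with a UTC offset), `Question`, and `Case Study` keys, as it might look after being fetched from Airtable.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone


def build_digest(records: list[dict], now: datetime, days: int = 7, top_n: int = 5) -> dict:
    """Summarize logged queries from the last `days` days."""
    cutoff = now - timedelta(days=days)
    recent = [r for r in records if datetime.fromisoformat(r["Timestamp"]) >= cutoff]
    # Normalize case and whitespace so near-identical questions are counted together.
    questions = Counter(r["Question"].strip().lower() for r in recent)
    return {
        "total_queries": len(recent),
        "by_case_study": dict(Counter(r["Case Study"] for r in recent)),
        "top_questions": questions.most_common(top_n),
    }
```

The resulting summary is small enough to paste into a prompt, which is where an OpenAI analysis pass could take over.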
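The loading-state messages amount to a schedule keyed on elapsed time. The real behavior runs in the browser, so this Python version only illustrates the selection logic; the timings and message text are hypothetical.

```python
# Hypothetical schedule: (seconds elapsed, message to show from that point on).
LOADING_MESSAGES = [
    (0, "Searching the case study notes..."),
    (3, "Pulling together relevant context..."),
    (8, "Drafting an answer..."),
    (15, "Still working, this one is taking longer than usual..."),
]


def loading_message(elapsed: float, schedule: list[tuple[float, str]] = LOADING_MESSAGES) -> str:
    """Return the latest message whose start time has been reached."""
    current = schedule[0][1]
    for start, message in schedule:
        if elapsed >= start:
            current = message
        else:
            break
    return current
```

In the UI, a timer would call the equivalent function every second or so while a request is pending and swap in the new message whenever it changes.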